Introduction

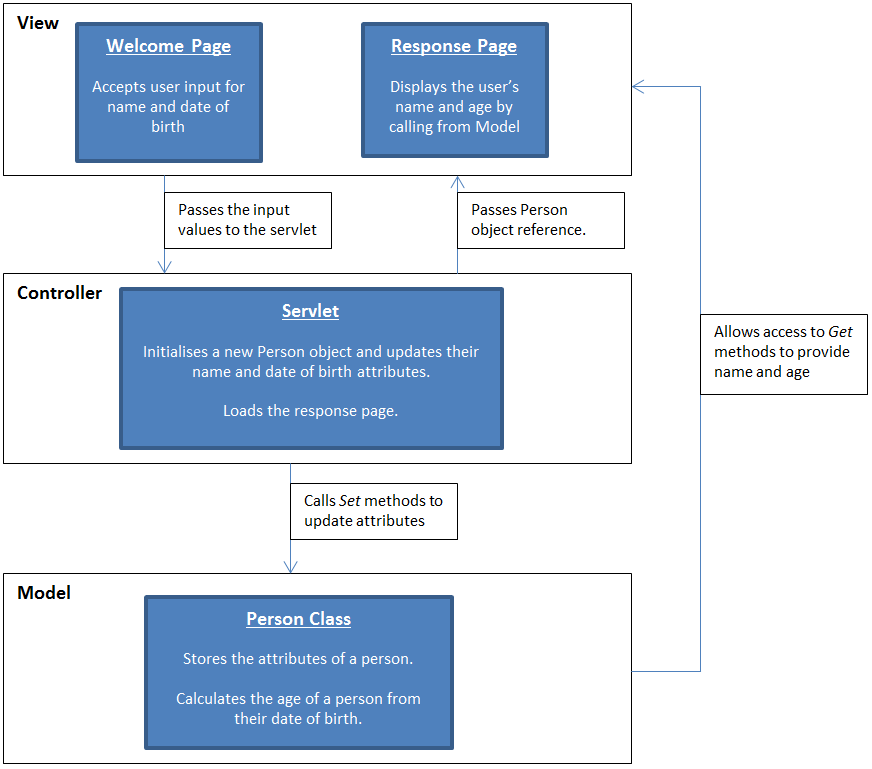

This blog will detail how to create a simple Web Application (Web App) on a Windows 7 64 bit system and deploy it to a GlassFish 4.0 server. The web app will be kept simple, so as to keep the focus of the tutorial on the concepts; as such, the web app will provide a web page prompting the user to enter their name and date of birth, before loading another page that repeats their name back at them with their age. The blog will cover how to set up, code, and deploy the web app using the NetBeans IDE, as well as how to manually deploy it using the Windows command prompt. It should be noted that unless stated otherwise, any additional settings that can be changed but are not noted in the guide should be left as their default.

This guide was written using the following:

Step 1: Initial Set up and Configuration

This step covers how to install and configure the software that was used in this blog (excluding Windows!).

Installation

Java

It is possible to download a bundle that contains the Java SDK and JDK, as well as GlassFish.

NetBeans can be downloaded with support for various technologies, and can come bundled with GlassFish 4.0. Feel free to download NetBeans with everything, but for this tutorial only the Java EE version is required.

Set Java Environment Variables

Only really necessary if you are using the command prompt, setting the Java environment variables allows Windows to automatically know where to look for Java and its libraries. Doing this saves you having to explicitly type out the path name to Java whenever you want to use it.

As previously noted, the NetBeans IDE can come bundled with the GlassFish server. This is the simplest method to install and configure GlassFish, as it will automatically set up a default server for us to use. Given that we already have GlassFish installed however, it is possible to add our existing GlassFish server to NetBeans (though if you run into trouble at this step, you could just reinstall NetBeans with the bundled GlassFish).

If you intend to run GlassFish from the command prompt, it is recommended to alter the path environment variable to prevent having to move to the GlassFish directory or state the file path whenever you want to use it. This is done using the same method as setting the Java environment variable:

Before we begin writing any code, we must create a project in which to store and organise our files.

Step 2: Writing the Application Body

This steps covers and explains the code that provides the workings for our web app.

The Person Class

This class is a JavaBean, a Java class designed to enable easy reuse that conforms to a specific standard: It has a constructor that takes no arguments, properties that are accessed through Gettersand Setters, and is Serializable. In our web app, the class is used to store information about the user, and allows other classes and pages to update and get information from it through Getter and Setter methods. To begin, we need to create the class for the code to go into:

At this time the Calendar, DateFormat, and Date will be underlined with a red line signifying an error. This is, again, because we haven’t imported the classes or specified their paths. Add the imports for java.util.Calendar, java.text.java.text.DateFormat, and java.util.Date respectively.

The code so far should look like this (minus the comments):

As noted previously, as the age attribute is not set by a servlet, we need to provide a method to calculate the age of the user from their supplied date of birth.

The PersonServlet Class

Step 3: Configuring the Web Pages

Now that we have our classes that store the inputted attributes and control our web app, we need to configure the web pages that will use them!

Creating and Configuring the userAgeResponse Page

The userAgeResponse page will be the page that is loaded once the user has submitted their name and date of birth, displaying in turn the user’s name back at them with their age. We begin, as usual, by creating a new file for our project:

![Get Bean Property Settings Window showing the settings for creating a Get Bean Property]()

Configuring the Index Page

With a page that responds to the user, we need to finish our web app by configuring our front web page, the indexpage, to allow user input. Navigate to the indexpage in the IDE, and again feel free to supply the page with a title. NetBeans can help us out again by providing means of generating much of the code to supply user input for us:

You may remember that we took in two parameters from the HTTPServletRequest in our servlet, the other being dateOfBirth.

With that, our index page, and our web app, are done! See below for what the code of the index page should look like:

Step 4: Deploying the Web App

This section will be split into two sections detailing two methods of deploying the application: the “manual” method, utilising the command prompt, and the “IDE” method, utilising NetBeans.

IDE

To deploy the web app to the GlassFish server, simply press the Run button. This starts up the GlassFish server and deploys the web app to the server using directory deployment before opening your web browser at the indexpage. Directory deployment is deploying a web app to the GlassFish server by utilising a structured directory instead of a web archive file (WAR). Alternatively:

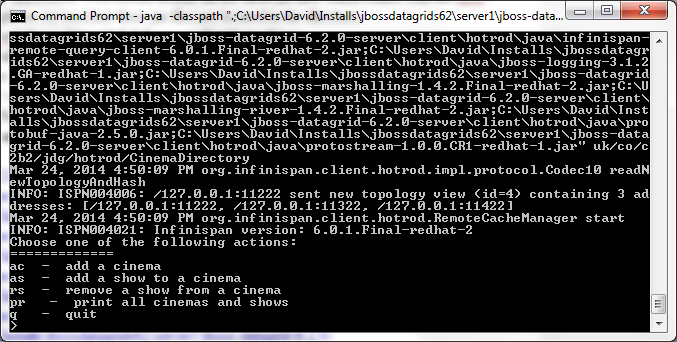

To begin with, we need to build the project, creating a WAR file. A WAR file is a type of Java Archive file (JAR) containing a packaged web application; it is an archive composed of the JSP, HTML and other files of the web application, as well as a runtime deployment descriptor (XML file) describing to the application server how to run the web app.

And with that, you have created a web app and (hopefully!) seen the satisfying "Command deploy executed successfully" message, signifying that you have just deployed your hard work to a GlassFish application server!

Andrew Pielage

Graduate Support Consultant

This blog details how to create a simple Web Application (web app) on a Windows 7 64-bit system and deploy it to a GlassFish 4.0 server. The web app is kept deliberately simple to keep the focus of the tutorial on the concepts: it provides a web page prompting the user to enter their name and date of birth, before loading another page that repeats their name back to them along with their age. The blog covers how to set up, code, and deploy the web app using the NetBeans IDE, as well as how to deploy it manually using the Windows command prompt. Unless stated otherwise, any settings not mentioned in this guide should be left at their defaults.

This guide was written using the following:

- Windows 7

- GlassFish 4.0

- Java EE 7 32 bit

- JDK 1.7.0_45 32 bit

- NetBeans 7.4

Step 1: Initial Set up and Configuration

This step covers how to install and configure the software that was used in this blog (excluding Windows!).

Installation

Java

It is possible to download a bundle that contains the Java EE SDK and the JDK, as well as GlassFish.

- Go to http://www.oracle.com/technetwork/java/javaee/downloads/index.html

- To download the SDK, JDK, and GlassFish, click on the download link for Java EE 7 SDK with JDK 7 U45 and select Windows (not Windows x64; this guide uses 32-bit Java).

- Install to the default location like any other regular program

- If you decide to install in a different location, you will have to alter any explicit file paths in the guide to correspond to your own installation location.

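NetBeans

NetBeans can be downloaded with support for various technologies, and can come bundled with GlassFish 4.0. Feel free to download NetBeans with everything, but for this tutorial only the Java EE version is required.

- Go to https://NetBeans.org/downloads/index.html

- Click on the Download button for Java EE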

- You may note that this also comes bundled with GlassFish 4.0. Handy, but redundant at this stage if you’ve followed this guide.

- Install like any other program, to the default location

- You can configure the installer not to reinstall GlassFish, or simply leave it as default. Reinstalling GlassFish with NetBeans has the benefit that NetBeans auto-configures a default GlassFish server for you to deploy the web app to, but it defaults to a different install location, so you may end up with GlassFish installed twice.

Set Java Environment Variables

This is only really necessary if you are using the command prompt. Setting the Java environment variables tells Windows where to look for Java and its libraries, which saves you having to type out the full path to Java whenever you want to use it.

- Click on Start, and then right click on Computer and select Properties

- Select Advanced System Settings from the list on the left of the window.

- Click on Environment Variables

- Select the Path variable under System Variables, and click on Edit

- Add the path for the JDK bin – on a default installation that is: C:\Program Files (x86)\Java\jdk1.7.0_45\bin

- Ensure there is a semicolon separating the new directory from the previous one

It is also prudent to add the JAVA_HOME environment variable whilst here.

- Click New under System Variables

- Enter JAVA_HOME as the variable name

- Enter the file path of the JDK – on a default installation that is: C:\Program Files (x86)\Java\jdk1.7.0_45
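Once the variables are saved, a quick way to confirm that new processes can see them is a small Java check like the sketch below. It is only an illustration: the class name EnvCheck and the helper pathContains are made up for this example, and it assumes the variables are named JAVA_HOME and Path as in the steps above. A command prompt that was already open before the change will not pick up the new values, so open a fresh one first.

```java
import java.io.File;
import java.util.regex.Pattern;

// Hypothetical helper, not part of the tutorial's project.
class EnvCheck {

    // Returns true when dir is one of the entries in a separator-delimited Path value.
    static boolean pathContains(String pathValue, String separator, String dir) {
        if (pathValue == null || dir == null) {
            return false;
        }
        for (String entry : pathValue.split(Pattern.quote(separator))) {
            // Windows treats paths case-insensitively by default, so ignore case here
            if (entry.trim().equalsIgnoreCase(dir)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        String path = System.getenv("Path");
        System.out.println("JAVA_HOME = " + javaHome);
        if (javaHome != null) {
            // The JDK bin folder is expected to sit directly under JAVA_HOME
            String jdkBin = javaHome + File.separator + "bin";
            System.out.println("JDK bin on Path: "
                    + pathContains(path, File.pathSeparator, jdkBin));
        }
    }
}
```

The separator is passed in explicitly so that the helper can be exercised with Windows-style values (semicolon separated) regardless of where it is run.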

As previously noted, the NetBeans IDE can come bundled with the GlassFish server. This is the simplest method to install and configure GlassFish, as it will automatically set up a default server for us to use. Given that we already have GlassFish installed however, it is possible to add our existing GlassFish server to NetBeans (though if you run into trouble at this step, you could just reinstall NetBeans with the bundled GlassFish).

- Select Tools, then Servers from the NetBeans toolbar.

- Select Add Server, on the bottom left of the pop up window.

- Select GlassFish Server, and press Next.

- You can leave the Name as the default “GlassFish Server”.

- Browse to where GlassFish is installed and accept the license agreement before clicking Next.

- If you let the Java installer install GlassFish, you should find it at: C:\glassfish4

- Leave the domain location at “Register Local Domain”, and the Domain Name as “domain1”.

- Enter “admin” as the user name, and a password if you want, before finally clicking Finish.

- Note – If you let NetBeans set up the server, then when you first start up the server it will set a random String as the password with a username of admin. NetBeans won’t leave you in the dark though; it will fill in the password for you automatically when it is asked for.

- If you want to look at the username or password, you can find them by clicking Tools, then Servers, and selecting the server from the list.

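If you intend to run GlassFish from the command prompt, it is recommended to add it to the Path environment variable, so that you do not have to move to the GlassFish directory or type out its file path whenever you want to use it. This is done using the same method as setting the Java environment variables:

- Click on Start, and then right click on Computer and select Properties

- Select Advanced System Settings from the list on the left of the window.

- Click on Environment Variables

- Select the Path variable under System Variables, and click on Edit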

- Enter the file path of the GlassFish bin folder

- NetBeans Install: C:\Program Files (x86)\glassfish-4.0\bin

- Java Install: C:\glassfish4\bin

- Enter the file path of the GlassFish server

- NetBeans Install: C:\Program Files (x86)\glassfish-4.0\glassfish

- Java Install: C:\glassfish4\glassfish

Before we begin writing any code, we must create a project in which to store and organise our files.

- Click on File, then New Project.

- Alternatively, use the keyboard shortcut Ctrl + Shift + N, or click on the New Project icon.

- From within the popup window, select Java Web from the Categories list, and then Web Application from the Projects list.

- Give your project a name; you can leave it as the default or choose your own. For this blog, I will be naming it SimpleWebApp.

- Once you have entered a name, click Next.

- Select GlassFish Server and Java EE 7 Web as the Server and Java EE version respectively, before clicking Finish.

If all is well, the project will be created and will appear in the Projects pane on the left-hand side of the IDE. An HTML file called index will also be created and opened in the main IDE view ready for editing, though we will leave this for now.

Step 2: Writing the Application Body

This step covers and explains the code that provides the workings for our web app.

The Person Class

This class is a JavaBean, a Java class designed to enable easy reuse that conforms to a specific standard: it has a constructor that takes no arguments, properties that are accessed through Getters and Setters, and is Serializable. In our web app, the class is used to store information about the user, and allows other classes and pages to update and get information from it through Getter and Setter methods. To begin, we need to create the class for the code to go into:

- Right click on the project, SimpleWebApp, hover over New to expand the list, and select Java Class.

- Enter Person as the class name, and enter org.mypackage.models as the package.

- Click Finish to create the class.

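- Alter the class declaration so that the class implements Serializable, adding the import for java.io.Serializable:

public class Person implements Serializable

Now that we have a class to work with, we can begin filling out the code: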

- Declare private variables of the following:

- A String called name – This will be used to store the name of the user.

- A byte called age – This will be used to store the age of the user once it has been calculated. Unless filling in an obscure date of birth, a person is not realistically going to be over 127 years old, so only a byte is needed.

- A String called dateOfBirth – This will be the String representation of the user-given date of birth.

- A Calendar called birthday – This will be the Calendar representation of the user’s date of birth, a more workable format for a date of birth.

- A DateFormat called birthdateFormat – The accepted format of the user’s date of birth.

- A Date called birthdate – This is the date of birth as a Date, used for converting the date of birth from a String to a Calendar.

- A Calendar called todaysDate – Stores today’s date, used for calculating the age of the user.

At this time the Calendar, DateFormat, and Date will be underlined with a red line signifying an error. This is, again, because we haven’t imported the classes or specified their paths. Add the imports for java.util.Calendar, java.text.DateFormat, and java.util.Date respectively.
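The choice of a byte for age has one consequence worth seeing in isolation: the age calculation later in this step narrows an int to a byte with a cast, and that cast does not fail when the value is too large, it wraps around. The sketch below is a standalone illustration (ByteRangeSketch and toByte are made-up names, not part of the project).

```java
// Hypothetical illustration of how an int is narrowed to a byte.
class ByteRangeSketch {

    // Narrows an int to a byte in the same way a (byte) cast does.
    static byte toByte(int value) {
        return (byte) value;
    }

    public static void main(String[] args) {
        System.out.println(Byte.MAX_VALUE); // largest value a byte can hold
        System.out.println(toByte(127));    // still fits in a byte
        System.out.println(toByte(128));    // too large, so it wraps to a negative number
    }
}
```

For the dates of birth a real user would enter this never matters, which is why the tutorial is happy to use a byte, but a mistyped year such as 1014 would produce a nonsensical age rather than an error.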

- Create a constructor with no arguments, initialising the variables as:

name = "";

age = 0;

birthday = GregorianCalendar.getInstance();

birthdateFormat = new SimpleDateFormat("yyyy-MM-dd");

dateOfBirth = birthdateFormat.format(new Date());

birthdate = new Date();

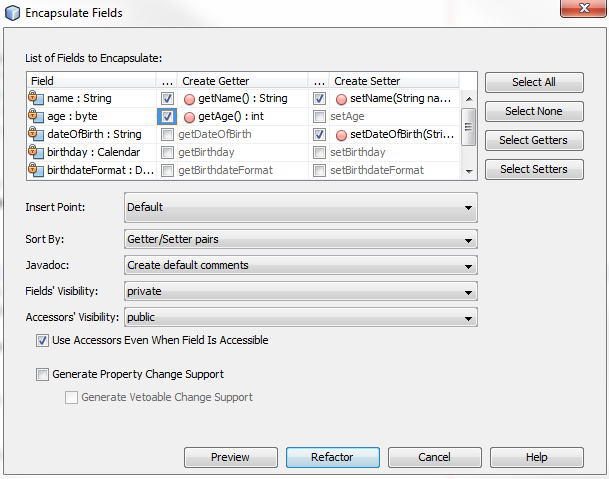

todaysDate = GregorianCalendar.getInstance(); - Right click on the name variable in the Source Editor in the bottom left of NetBeans, expand the Refactor list, and click on Encapsulate Fields. This window displays the variables in the class that can be encapsulated, providing the means to generate Getter and Setter methods for variables, and to automatically set the visibility of said variables, Getter, and Setter methods. We can leave the default settings, but with two additions:

- Create a Getterfor age– We don’t need a Setter as this is calculated inside the class, not set by an external controller.

- Create a Setterfor dateOfBirth– We don’t need a Getter within the scope of this tutorial, as we don’t have any pages that will access this attribute.

- Click Refactor to generate the code. Were our variables not already declared as private, the selection of private for the Field Visibility would have declared them as such for us. This will also have added JavaDoc annotations, metadata, for the Getter and Setter methods.
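To see the three JavaBean conventions working together (a no-argument constructor, Getter and Setter access, and Serializable), the sketch below round-trips a tiny bean through Java serialization. GreetingBean and BeanRoundTrip are hypothetical names used only for this illustration; the tutorial's own Person class follows the same pattern.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

class BeanRoundTrip {

    // Hypothetical minimal JavaBean: no-argument constructor, accessors, Serializable.
    public static class GreetingBean implements Serializable {
        private static final long serialVersionUID = 1L;
        private String name;

        public GreetingBean() {
            name = "";
        }

        public String getName() {
            return name;
        }

        public void setName(String name) {
            this.name = name;
        }
    }

    // Writes the bean to an in-memory byte array and reads a new copy back from it.
    static GreetingBean copyViaSerialization(GreetingBean bean) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            ObjectOutputStream out = new ObjectOutputStream(bytes);
            out.writeObject(bean);
            out.close();
            ObjectInputStream in =
                    new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()));
            GreetingBean copy = (GreetingBean) in.readObject();
            in.close();
            return copy;
        } catch (IOException | ClassNotFoundException ex) {
            throw new IllegalStateException(ex);
        }
    }

    public static void main(String[] args) {
        GreetingBean original = new GreetingBean();
        original.setName("Ada");
        GreetingBean copy = copyViaSerialization(original);
        System.out.println(copy.getName());   // the property value survives the round trip
        System.out.println(copy != original); // and the copy is a distinct object
    }
}
```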

The code so far should look like this (minus the comments):

package org.mypackage.models;

import java.io.Serializable;

import java.text.DateFormat;

import java.text.SimpleDateFormat;

import java.util.Calendar;

import java.util.Date;

import java.util.GregorianCalendar;

public class Person implements Serializable

{

private String name; // The name of the user

private byte age; // The age of the user

private String dateOfBirth; // The date of birth of the user

private Calendar birthday; // The birthday of the user

private DateFormat birthdateFormat; // The format of the birth date

private Date birthdate; // The dateOfBirth in a Date format

private Calendar todaysDate; // Todays date

// Initialise any variables with default values

public Person()

{

name = "";

age = 0;

birthday = GregorianCalendar.getInstance();

birthdateFormat = new SimpleDateFormat("yyyy-MM-dd");

dateOfBirth = birthdateFormat.format(new Date());

birthdate = new Date();

todaysDate = GregorianCalendar.getInstance();

}

// The Getter method for the name

public String getName()

{

return name;

}

// The Setter method for the name

public void setName(String name)

{

this.name = name;

}

// The Getter method for the age

public int getAge()

{

return age;

}

// The Setter method for the date of birth

public void setDateOfBirth(String dateOfBirth)

{

this.dateOfBirth = dateOfBirth;

}

}

Because the age attribute is not set by a servlet, we need to provide a method to calculate the age of the user from their supplied date of birth.

- Create a private void method calculateAge().

- Create a try clause – as we are parsing from a String to a Date, we need to catch a ParseException if one is thrown (add the import for java.text.ParseException).

- Within the try clause:

- Parse the dateOfBirth String in the format of birthdateFormat, and set this as birthdate

birthdate = birthdateFormat.parse(dateOfBirth);

- Use the setTime method of Calendar to set the birthday variable as the formatted Date value of birthdate

birthday.setTime(birthdate);

- End the try clause and catch the exception with a basic message (bad practice as exceptions should not just be ignored like this, but for this demo we can get away with it).

catch (ParseException ex)

{

System.out.println("Parse Exception when parsing the dateOfBirth");

}

With the String now parsed to a Calendar, we can make use of the Calendar methods to extract the year from the birthday and today’s date to calculate the user’s age.

- Subtract the year of the user’s date of birth from today’s year to get the difference in years, before then casting it as a byte. Set the age variable as this value.

age = (byte)(todaysDate.get(Calendar.YEAR) - birthday.get(Calendar.YEAR));

- Add the age variable, currently representing the difference in years, to the user’s date of birth, the birthday variable, to get the user’s birthday for this year.

birthday.add(Calendar.YEAR, age);

- Compare today’s date against the user’s birthday this year, using the todaysDate and birthday variables respectively, to see if today’s date is before the user’s birthday, decrementing age if it is.

if (todaysDate.before(birthday))

{

age--;

}

- The method should now look like this:

private void calculateAge()

{

try

{

// Convert the user supplied date of birth String to a Date in the specified format

birthdate = birthdateFormat.parse(dateOfBirth);

// Load the Date value into the Calendar to allow easier use

birthday.setTime(birthdate);

}

catch (ParseException ex)

{

System.out.println("Parse Exception when parsing the dateOfBirth");

}

// Calculate the age

age = (byte)(todaysDate.get(Calendar.YEAR) - birthday.get(Calendar.YEAR));

// Get the date of the user's birthday this year

birthday.add(Calendar.YEAR, age);

// Check if the user's birthday has passed this year

if (todaysDate.before(birthday))

{

// Reduce the age by one if it hasn't

age --;

}

}

Only one final thing remains to complete the Person class:

- Add in the method call for calculateAge before the return statement in the getAge() method.

calculateAge();

The completed Person class should now look like this:

package org.mypackage.models;

import java.io.Serializable;

import java.text.DateFormat;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.Calendar;

import java.util.Date;

import java.util.GregorianCalendar;

public class Person implements Serializable

{

private String name; // The name of the user

private byte age; // The age of the user

private String dateOfBirth; // The date of birth of the user

private Calendar birthday; // The birthday of the user

private DateFormat birthdateFormat; // The format of the birth date

private Date birthdate; // The dateOfBirth in a Date format

private Calendar todaysDate; // Todays date

// Initialise any variables with default values

public Person()

{

name = "";

age = 0;

birthday = GregorianCalendar.getInstance();

birthdateFormat = new SimpleDateFormat("yyyy-MM-dd");

dateOfBirth = birthdateFormat.format(new Date());

birthdate = new Date();

todaysDate = GregorianCalendar.getInstance();

}

// The Getter method for the name

public String getName()

{

return name;

}

// The Setter method for the name

public void setName(String name)

{

this.name = name;

}

// The Getter method for the age

public int getAge()

{

// Call the method to calculate the age before returning it

calculateAge();

return age;

}

// The Setter method for the date of birth

public void setDateOfBirth(String dateOfBirth)

{

this.dateOfBirth = dateOfBirth;

}

// The method that calculates the user's age from their date of birth

private void calculateAge()

{

try

{

// Convert the user supplied date of birth String to a Date in the specified format

birthdate = birthdateFormat.parse(dateOfBirth);

// Load the Date value into the Calendar to allow easier use

birthday.setTime(birthdate);

}

catch (ParseException ex)

{

System.out.println("Parse Exception when parsing the dateOfBirth");

}

// Calculate the age

age = (byte)(todaysDate.get(Calendar.YEAR) - birthday.get(Calendar.YEAR));

// Get the date of the user's birthday this year

birthday.add(Calendar.YEAR, age);

// Check if the user's birthday has passed this year

if (todaysDate.before(birthday))

{

// Reduce the age by one if it hasn't

age --;

}

}

}
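To try the age calculation outside the web app, the same three steps (year difference, birthday this year, compare with today) can be lifted into a small standalone method. This is a sketch rather than the tutorial's code: AgeSketch, date, and ageOn are made-up names, and today's date is passed in as a parameter so the result can be checked against fixed dates.

```java
import java.util.Calendar;
import java.util.GregorianCalendar;

// Hypothetical standalone version of the calculateAge() logic.
class AgeSketch {

    // Builds a Calendar at midnight for the given date (month is 1-12 here).
    static Calendar date(int year, int month, int day) {
        return new GregorianCalendar(year, month - 1, day);
    }

    // Returns the age in whole years of someone born on dateOfBirth, as of today.
    static int ageOn(Calendar dateOfBirth, Calendar today) {
        // Step 1: the difference in calendar years
        int age = today.get(Calendar.YEAR) - dateOfBirth.get(Calendar.YEAR);

        // Step 2: the birthday in today's year (work on a copy to leave the input untouched)
        Calendar birthdayThisYear = (Calendar) dateOfBirth.clone();
        birthdayThisYear.add(Calendar.YEAR, age);

        // Step 3: if that birthday has not been reached yet, take a year off
        if (today.before(birthdayThisYear)) {
            age--;
        }
        return age;
    }

    public static void main(String[] args) {
        Calendar today = date(2014, 1, 29);
        System.out.println(ageOn(date(1990, 1, 29), today)); // birthday is today
        System.out.println(ageOn(date(1990, 1, 30), today)); // birthday is tomorrow
    }
}
```

Passing today's date in, rather than reading it inside the method, is a design choice made purely to keep the sketch repeatable; the Person class above captures the current date in its constructor instead.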

The PersonServlet Class

A servlet is, like a JavaServer Page, a means of enabling dynamic content in a web application. The servlet in our application takes the user input from the HTML index page and updates the attributes of a Person object, before loading a new web page to display the response.

- Right click on the Project in the Projects pane, expand the New list, and select Servlet.

- Provide a Class Name of PersonServlet, a Package of org.mypackage.controllers, and click Next

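- Check the box to Add information to deployment descriptor to generate the XML code providing the means of accessing the servlet from the index page.

- Declare the private variables for the servlet to take in from the index page, name and dateOfBirth. Be aware that a servlet instance is shared between requests, so outside of a demo like this one such per-request values are normally kept in local variables.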

- Declare and initialise the private variable to be used to identify what will be our Person object when passed to another page, personID.

private String name; // The user's name

private String dateOfBirth; // The user's date of birth

private String personID = "personBean"; // The ID of the Person object

- Initialise the name and dateOfBirth variables as the parameters of the same name from the HTTP request, with the getParameter method (be sure to put the parameters between ""!).

name = request.getParameter("name");

dateOfBirth = request.getParameter("dateOfBirth");

- Initialise a new Person object, adding the import for org.mypackage.models.Person.

import org.mypackage.models.Person;

Person person = new Person();

- With the Person object initialised, utilise its Setter methods to update the name and dateOfBirth attributes with those supplied by the user.

person.setName(name);

person.setDateOfBirth(dateOfBirth);

With the attributes set, we need to pass control to another page to load the response for this specific Person object. To accomplish this, we set our person object and its identifier, personID, as attributes of the request, and pass control to the new page.

- Set personID and person as attributes of the request.

request.setAttribute(personID, person);

- Pass control to what will be our responding page, userAgeResponse.jsp, and forward the request and response parameters to it.

RequestDispatcher dispatcher = getServletContext().getRequestDispatcher("/userAgeResponse.jsp");

dispatcher.forward(request, response);

The finished servlet should look like this:

package org.mypackage.controllers;

import java.io.IOException;

import javax.servlet.RequestDispatcher;

import javax.servlet.ServletException;

import javax.servlet.annotation.WebServlet;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import org.mypackage.models.Person;

// Specify the name of the servlet and the URL for it

@WebServlet(name = "PersonServlet", urlPatterns = {"/PersonServlet"})

public class PersonServlet extends HttpServlet {

private String name; // The user's name

private String dateOfBirth; // The user's date of birth

private String personID = "personBean"; // The ID of the Person object

/**

* Processes requests for both HTTP <code>GET</code> and <code>POST</code>

* methods.

*

* @param request servlet request

* @param response servlet response

* @throws ServletException if a servlet-specific error occurs

* @throws IOException if an I/O error occurs

*/

protected void processRequest(HttpServletRequest request, HttpServletResponse response)

throws ServletException, IOException

{

// Retrieve the user input values from the Welcome Page

name = request.getParameter("name");

dateOfBirth = request.getParameter("dateOfBirth");

// Initialise a new Person object

Person person = new Person();

// Update the default values in the Person object with the user supplied values

person.setName(name);

person.setDateOfBirth(dateOfBirth);

// Set the person object and its identifier as attributes of the request

request.setAttribute(personID, person);

// Load the userAgeResponse Page

RequestDispatcher dispatcher = getServletContext().getRequestDispatcher("/userAgeResponse.jsp");

dispatcher.forward(request, response);

}

// … Default HttpServlet methods …

}
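The servlet above passes whatever getParameter returns straight to the Person object. getParameter returns null when a parameter is missing from the request, and a null date is not something the ParseException handler in Person deals with, so a real application would normally check the values first. The sketch below shows one way such a check might look in plain Java; InputCheck and its methods are hypothetical names, and the yyyy-MM-dd format is the one used by the Person class.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;

// Hypothetical input checks that a servlet could run before using request parameters.
class InputCheck {

    // Returns the parsed date, or null when the value is missing or fails strict parsing.
    static Date parseDateOrNull(String value) {
        if (value == null) {
            return null;
        }
        SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd");
        format.setLenient(false); // reject impossible dates such as 2014-02-30
        try {
            return format.parse(value);
        } catch (ParseException ex) {
            return null;
        }
    }

    // A name is usable when it is present and contains something other than whitespace.
    static boolean isUsableName(String value) {
        return value != null && !value.trim().isEmpty();
    }

    public static void main(String[] args) {
        System.out.println(isUsableName("Ada"));
        System.out.println(parseDateOrNull("2014-01-29") != null);
        System.out.println(parseDateOrNull("2014-02-30") != null);
    }
}
```

A new SimpleDateFormat is created on every call because SimpleDateFormat instances are not safe to share between threads, which matters in a servlet.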

Step 3: Configuring the Web Pages

Now that we have our classes that store the inputted attributes and control our web app, we need to configure the web pages that will use them!

Creating and Configuring the userAgeResponse Page

The userAgeResponse page will be the page that is loaded once the user has submitted their name and date of birth, displaying in turn the user’s name back at them with their age. We begin, as usual, by creating a new file for our project:

- Right click the SimpleWebApp node in the Projects window, expand the New list, and click on JSP, short for JavaServer Page.

- Name the JSP userAgeResponse, leave the other settings as default, and click Finish.

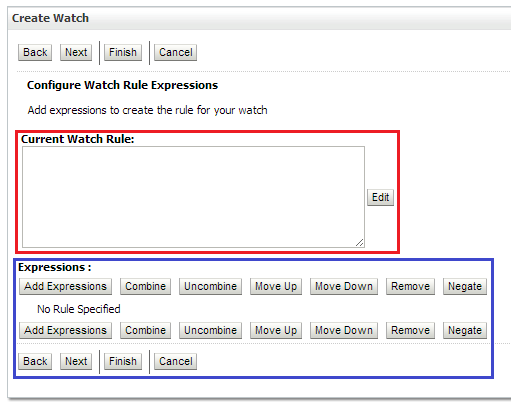

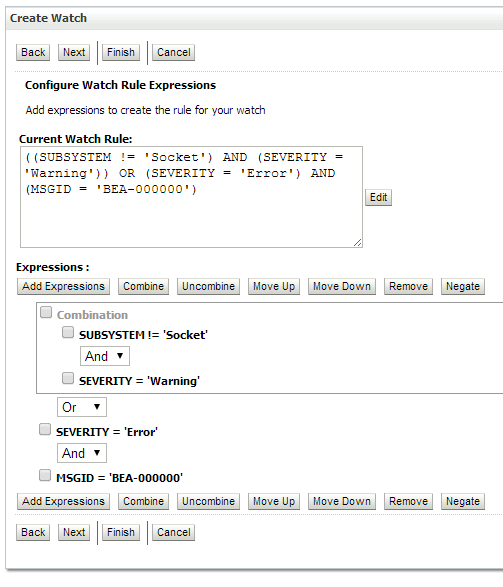

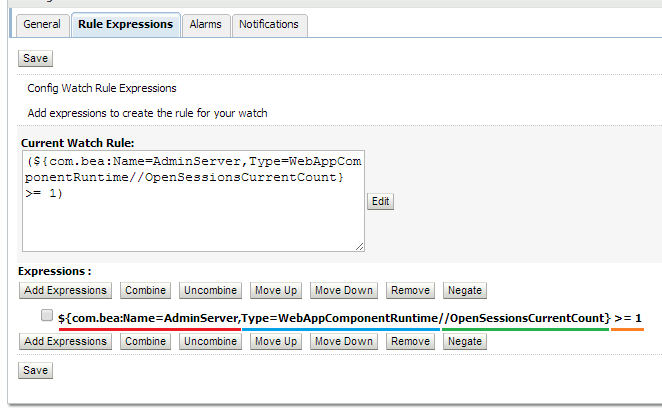

This creates the JSP and opens it in the editor for us to tinker with. Feel free to give the page a title, though it doesn’t actually affect the functionality at all. NetBeans allows us to generate HTML and other useful web content functions by dragging them from a window called the Palette into the editor at the point we want them generated. We will make use of this to save us typing out the code to utilise the JavaBean we created earlier:

- Open the palette by clicking on Window from the NetBeans toolbar, expand the IDE Tools list, and select Palette

- This can also be done with the keyboard shortcut Ctrl + Shift + 8

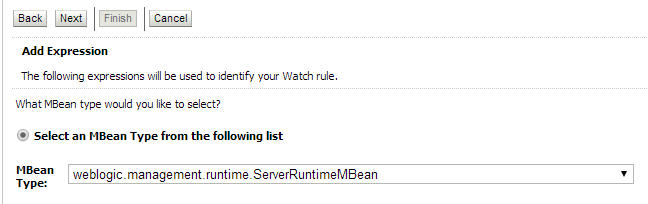

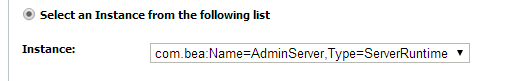

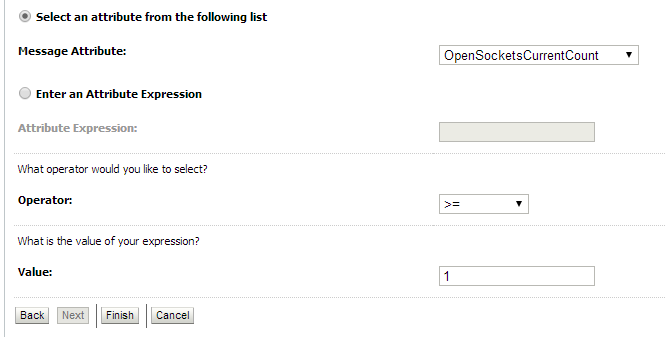

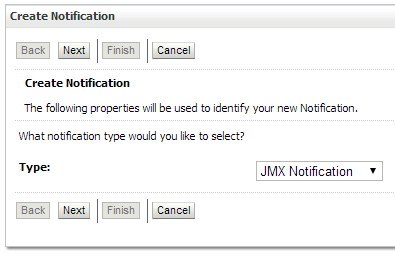

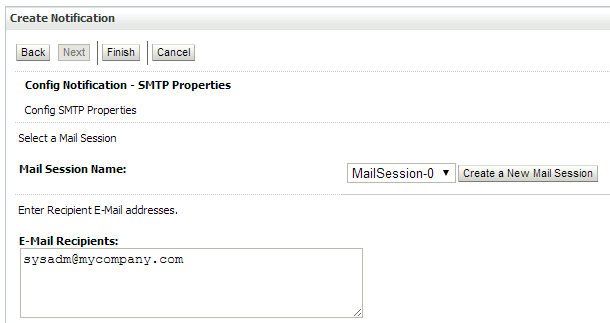

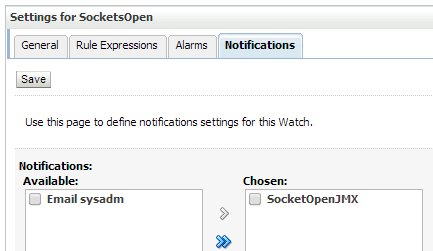

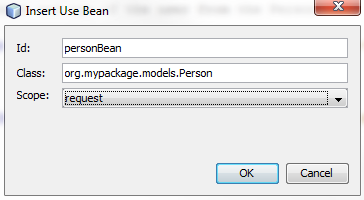

- Expand the JSP tab, and drag a Use Bean into the editor above the HTML tags of the page. In the window that appears, enter personBean as the ID, org.mypackage.models.Person as the Class, and set the Scope to request. As you may be able to tell from the parameters, this will be used to access the Person class and the Get and Set methods within it. In particular, it will access the person object we created and sent to the page from our servlet, utilising the ID of personBean, which was the value of personID passed to it from the servlet.

<jsp:useBean id="personBean" scope="request" class="org.mypackage.models.Person" />

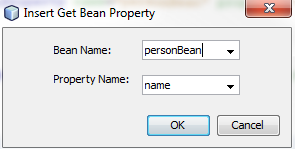

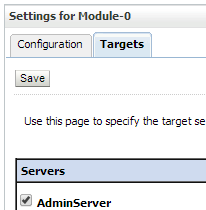

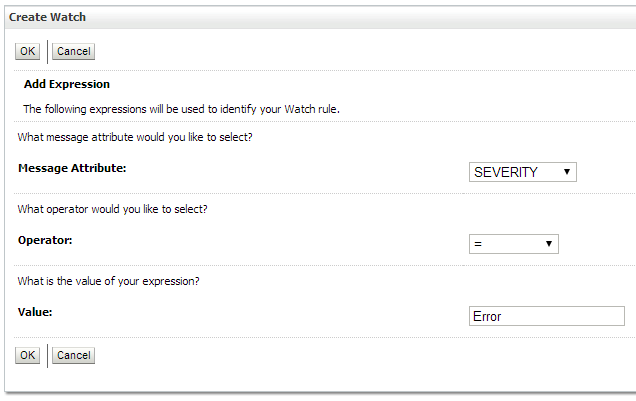

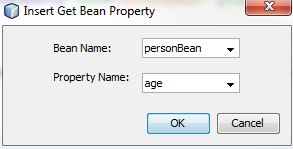

- Drag two Get Bean Property items between the body tags, with the Bean Name for both set to personBean, and the Property Name set as name for one and age for the other. This calls the Getter method for the name and age attributes respectively on the object with the ID specified in the Use Bean, personBean.

<jsp:getProperty name="personBean" property="name" />

<jsp:getProperty name="personBean" property="age" />

- Add some friendly text and a line break between the two Get Bean Properties for ease of reading to finish off the page.

Hello <jsp:getProperty name="personBean" property="name" />!

<br>

You are <jsp:getProperty name="personBean" property="age" /> years old!

And with that, we have completed the userAgeResponse page, and it should look like this:

<%@page contentType="text/html" pageEncoding="UTF-8"%>

<!DOCTYPE html>

<!--

Gain access to the person object

-->

<jsp:useBean id="personBean" scope="request" class="org.mypackage.models.Person" />

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">

<title>Your Age</title>

</head>

<body>

<!--

Pull the name and age of the user from the Person object

-->

Hello <jsp:getProperty name="personBean" property="name" />!

<br>

You are <jsp:getProperty name="personBean" property="age" /> years old!

</body>

</html>
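The link between a property name in a Get Bean Property tag and the Getter that ends up being called comes from the JavaBeans naming rules. As a rough illustration of that mapping (not of what the JSP engine does internally), the sketch below uses the standard java.beans.Introspector to look up the getter for a property name. DemoBean is a hypothetical stand-in with the same accessors as the Person class, and PropertyLookup and getterNameFor are made-up names.

```java
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.lang.reflect.Method;

class PropertyLookup {

    // Hypothetical stand-in with the same accessor shape as the tutorial's Person class.
    public static class DemoBean {
        private String name = "";
        private String dateOfBirth = "";

        public String getName() { return name; }
        public void setName(String name) { this.name = name; }
        public int getAge() { return 0; }
        public void setDateOfBirth(String dateOfBirth) { this.dateOfBirth = dateOfBirth; }
    }

    // Returns the name of the getter behind a bean property, or null if there is none.
    static String getterNameFor(Class<?> beanClass, String property) {
        try {
            PropertyDescriptor[] descriptors =
                    Introspector.getBeanInfo(beanClass).getPropertyDescriptors();
            for (PropertyDescriptor descriptor : descriptors) {
                if (descriptor.getName().equals(property)) {
                    Method getter = descriptor.getReadMethod();
                    return getter == null ? null : getter.getName();
                }
            }
        } catch (IntrospectionException ex) {
            // Treated the same as "no such property" in this sketch
        }
        return null;
    }

    public static void main(String[] args) {
        System.out.println(getterNameFor(DemoBean.class, "name"));
        System.out.println(getterNameFor(DemoBean.class, "age"));
        System.out.println(getterNameFor(DemoBean.class, "dateOfBirth")); // no getter exists
    }
}
```

This is also why the response page only reads name and age: dateOfBirth was given a Setter but no Getter, so there is nothing for a Get Bean Property to call.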

Configuring the Index Page

With a page that responds to the user, we need to finish our web app by configuring our front web page, the indexpage, to allow user input. Navigate to the indexpage in the IDE, and again feel free to supply the page with a title. NetBeans can help us out again by providing means of generating much of the code to supply user input for us:

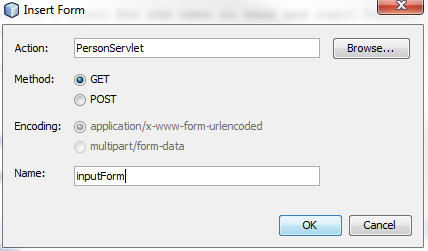

- Open up the Palette if it isn’t already open, and expand the HTML Forms menu. Remove any default text inside of the body of the code, before pulling a Form into the body from the Palette. Set our servlet, PersonServlet, as the Action, GET as the Method, and give it a Name of inputForm. This specifies that the inputs from this form will be sent to our servlet via the HTTP GET method.

<form name=“Input Form” action=“PersonServlet”>

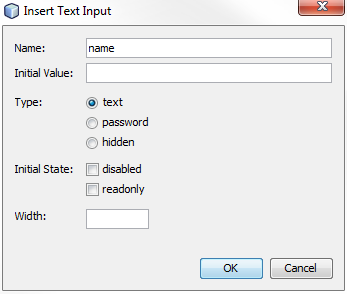

- Make some space between the form tags, and place a Text Input item inside. Give the input a Name of name, and hit OK, leaving the other fields as default. The Name field here gives the name of the parameter to be passed, so it is the same name parameter extracted from the HttpServletRequest request in our servlet.

<input type="text" name="name" value="" />

You may remember that we took in two parameters from the HttpServletRequest in our servlet, the other being dateOfBirth.

- Create another Text Input with the name of dateOfBirth to satisfy this foresight of ours. Once generated, alter the type from text to date, providing some input validation, and give it a default value in the format yyyy-MM-dd as a visual cue of the expected input format in case the browser does not support the HTML date input type.

<input type="date" name="dateOfBirth" value="2014-01-29" />

- Type in some descriptive text before the text inputs to give the input fields a label for the user to see, and place a line break between the fields to improve readability.

Enter Your Name: <input type="text" name="name" value="" />

<br>

Enter Your Date of Birth: <input type="date" name="dateOfBirth" value="2014-01-29" />

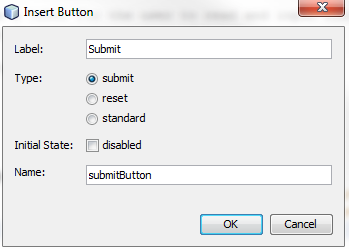

- Finally, place another line break after the text inputs and drag a Button in, giving it a Label of Submit, leaving the Type as Submit, and a Name of Submit Button.

<input type="submit" value="Submit" name="Submit Button" />

With that, our index page, and our web app, are done! See below for what the code of the index page should look like:

<!DOCTYPE html>

<html>

<head>

<title>Welcome Page</title>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width">

</head>

<body>

<!--

Create an input form to take in the user's name and date of birth

and send them to the servlet

-->

<form name="Input Form" action="PersonServlet">

<!--

Provide a label for the user to read and input fields,

passing on the name and date of birth

-->

Enter Your Name: <input type="text"

name="name"

value="" />

<br>

Enter Your Date of Birth: <input type="date"

name="dateOfBirth"

value="2014-01-29" />

<br>

<!--

Provide a button as a means of submitting the user's data

-->

<input type="submit" value="Submit" name="Submit Button"/>

</form>

</body>

</html>
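Because the form is submitted with GET, the browser appends the field values to the servlet's URL as a query string, which is where request.getParameter later finds them. The sketch below builds the kind of URL the browser would request for the two text inputs. It is an illustration only: QueryStringSketch and its methods are made-up names, the host and port assume a default local GlassFish, and the named submit button (which the browser would also send) is left out for brevity.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Hypothetical illustration of the URL produced by submitting the form with GET.
class QueryStringSketch {

    // Encodes a form value the way HTML forms do (spaces become '+', and so on).
    static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException ex) {
            // UTF-8 is always available, so this should not happen
            throw new IllegalStateException(ex);
        }
    }

    // Builds the request URL for the given name and date of birth.
    static String requestUrl(String name, String dateOfBirth) {
        return "http://localhost:8080/SimpleWebApp/PersonServlet"
                + "?name=" + encode(name)
                + "&dateOfBirth=" + encode(dateOfBirth);
    }

    public static void main(String[] args) {
        System.out.println(requestUrl("Ada Lovelace", "1990-01-30"));
    }
}
```

Pasting a URL of this shape into the browser's address bar is a handy way to test the servlet without going through the index page.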

Step 4: Deploying the Web App

This section is split into two parts detailing two methods of deploying the application: the “IDE” method, utilising NetBeans, and the “manual” method, utilising the command prompt.

IDE

To deploy the web app to the GlassFish server, simply press the Run button. This starts up the GlassFish server and deploys the web app to the server using directory deployment before opening your web browser at the index page. Directory deployment means deploying a web app to the GlassFish server from a structured directory instead of a web archive (WAR) file. Alternatively:

- Right click on the project in the Projects pane, and click on Deploy. This will start the GlassFish server, if it isn’t already running, undeploy any current version of the web app already deployed, and deploy the web app to the server.

- Click on the Run button, and the web app will now load in your browser.

Manual

To begin with, we need to build the project, creating a WAR file. A WAR file is a type of Java Archive file (JAR) containing a packaged web application; it is an archive composed of the JSP, HTML and other files of the web application, as well as a runtime deployment descriptor (XML file) describing to the application server how to run the web app.

- In NetBeans, right click on the project in the Projects window and click on Clean and Build.

- Open a command prompt and enter the command asadmin start-domain. The asadmin command is a GlassFish utility that lets you run administrative tasks, such as starting and stopping servers. As we are using the default GlassFish domain, domain1, we do not need to enter a domain name.

- Navigate to the dist folder containing the SimpleWebApp.war file.

- Alternatively you can type out the file path to SimpleWebApp.war each time it is used.

- Type the command asadmin deploy SimpleWebApp.war.

- If the SimpleWebApp is already deployed, you can force a redeploy using the following command - asadmin deploy --force=true SimpleWebApp.war.

- Open your browser and navigate to http://localhost:8080/SimpleWebApp
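If you would rather check the deployment from code than from the browser, a small smoke test along the following lines could be used. It is a sketch under assumptions: DeployCheck, appUrl, and responds are made-up names, and the host, port, and context root are the defaults used in this guide. It simply requests the app's address and reports whether the server answered with HTTP 200.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;

// Hypothetical smoke test for a locally deployed web app.
class DeployCheck {

    // Builds the address of a web app from its host, port, and context root.
    static String appUrl(String host, int port, String contextRoot) {
        return "http://" + host + ":" + port + "/" + contextRoot;
    }

    // Returns true if a GET request to the address is answered with HTTP 200.
    static boolean responds(String address) {
        try {
            HttpURLConnection connection =
                    (HttpURLConnection) new URL(address).openConnection();
            connection.setConnectTimeout(2000); // give up quickly if nothing is listening
            connection.setReadTimeout(2000);
            int status = connection.getResponseCode();
            connection.disconnect();
            return status == HttpURLConnection.HTTP_OK;
        } catch (IOException ex) {
            // Server not running, app not deployed, or address malformed
            return false;
        }
    }

    public static void main(String[] args) {
        String address = appUrl("localhost", 8080, "SimpleWebApp");
        System.out.println(address + " responding: " + responds(address));
    }
}
```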

And with that, you have created a web app and (hopefully!) seen the satisfying "Command deploy executed successfully" message, signifying that you have just deployed your hard work to a GlassFish application server!

Andrew Pielage

Graduate Support Consultant